Adapt then Unlearn: Exploring Parameter Space Semantics for Unlearning in GANs

Piyush Tiwary, Atri Guha, Subhodip Panda, Prathosh A.P.

Indian Institute of Science, Bengaluru, India

Abstract

Owing to growing concerns about privacy and regulatory compliance, it is desirable to regulate the output of generative models. To that end, the objective of this work is to prevent the generation of outputs containing undesired features from a pre-trained Generative Adversarial Network (GAN) where the underlying training dataset is inaccessible. Our approach is inspired by the observation that the parameter space of GANs exhibits meaningful directions that can be leveraged to suppress specific undesired features. However, such directions usually result in the degradation of the quality of generated samples. Our proposed two-stage method, known as 'Adapt-then-Unlearn,' excels at unlearning such undesirable features while also maintaining the quality of generated samples. In the initial stage, we adapt a pre-trained GAN on a set of negative samples (containing undesired features) provided by the user. Subsequently, we train the original pre-trained GAN using positive samples, along with a repulsion regularizer. This regularizer encourages the learned model parameters to move away from the parameters of the adapted model (first stage) while not degrading the generation quality. We provide theoretical insights into the proposed method. To the best of our knowledge, our approach stands as the first method addressing unlearning within the realm of high-fidelity GANs (such as StyleGAN). We validate the effectiveness of our method through comprehensive experiments, encompassing both class-level unlearning on the MNIST and AFHQ datasets and feature-level unlearning tasks on the CelebA-HQ dataset. Our code is available at: https://github.com/atriguha/Adapt_Unlearn.

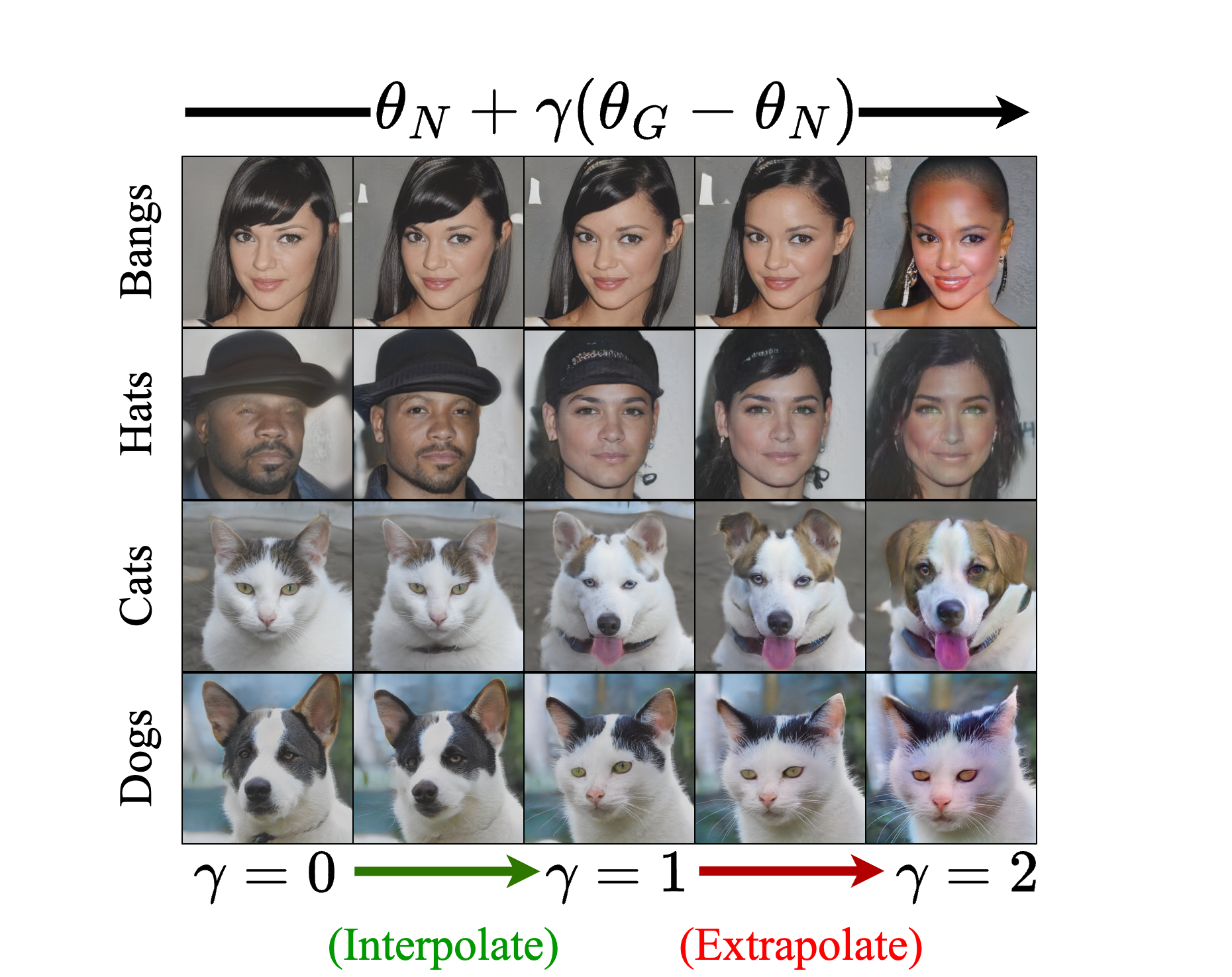

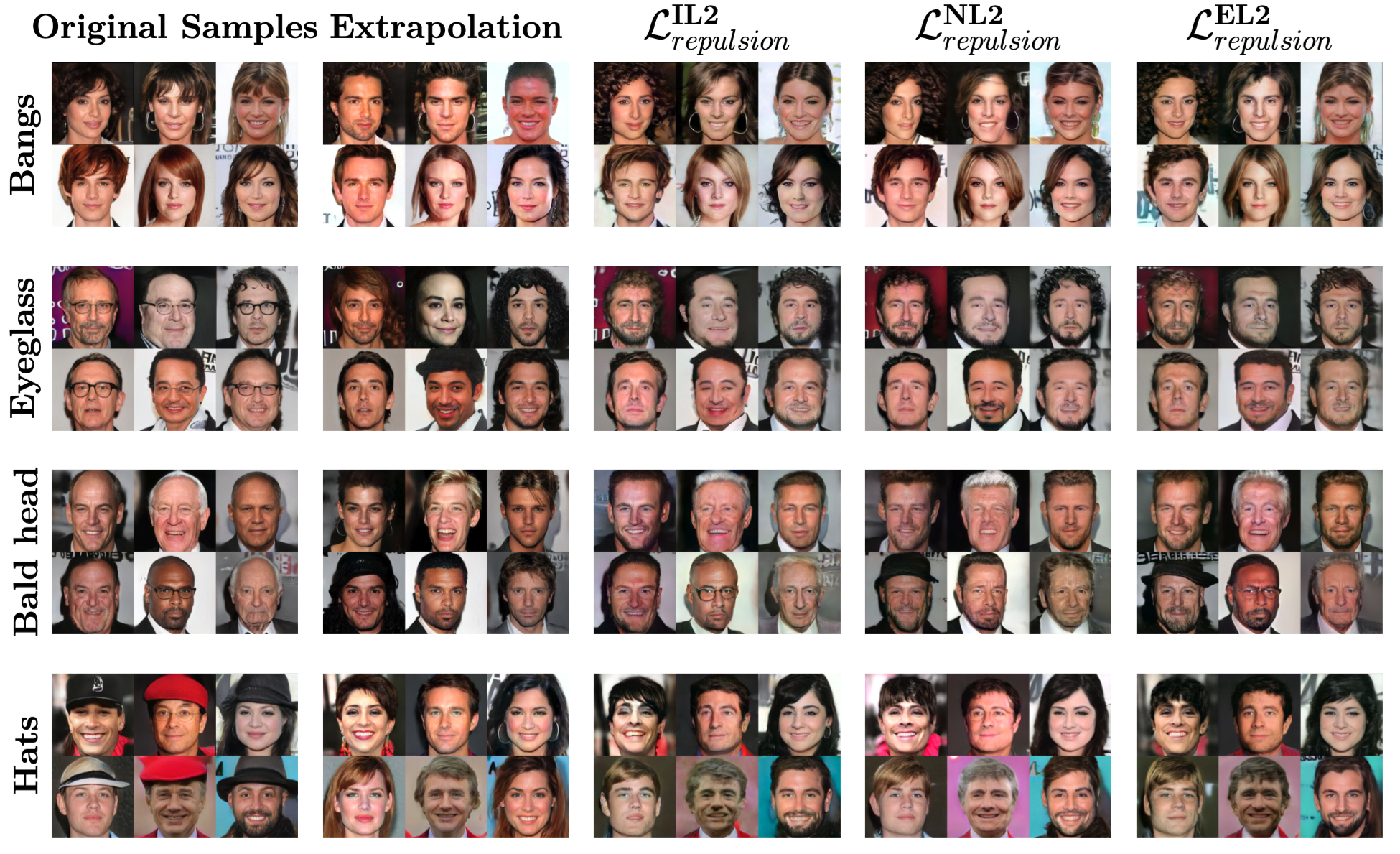

Parameter Space Semantics

Our approach hinges on identifying interpretable and meaningful directions within the parameter space of a pre-trained GAN generator, as discussed in (Cherepkov et al., 2021; Ilharco et al., 2023). In particular, the first stage of the proposed method yields adapted parameters that exclusively generate negative samples, whereas the parameters of the original pre-trained generator generate both positive and negative samples. Hence, the direction pointing from the adapted parameters to the original parameters (the original parameters minus the adapted parameters) can be interpreted as a direction in parameter space along which the generation of negative samples decreases. Given this, it is sensible to move away from the original parameters along this direction to further reduce the generation of negative samples. This observation is shown in Figure 1. However, such extrapolation does not ensure that the quality of other image features is preserved. In fact, moving too far from the original parameters may hamper the smoothness of the latent space, potentially degrading the overall generation quality (see last column of Figure 1).
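The extrapolation described above can be sketched in a few lines. This is a minimal illustration on plain Python lists, not the authors' implementation: the function name `extrapolate`, the step size `gamma`, and the toy parameter values are all assumptions introduced here.

```python
def extrapolate(theta_g, theta_n, gamma):
    """Return theta_g + gamma * (theta_g - theta_n) for every named parameter.

    theta_g: original generator parameters (dict of name -> list of floats)
    theta_n: negatively adapted parameters with the same structure
    gamma:   hypothetical step size; 0 keeps the original parameters
    """
    return {
        name: [g + gamma * (g - n) for g, n in zip(theta_g[name], theta_n[name])]
        for name in theta_g
    }


# Toy flattened weights (made-up values, not taken from any real model).
theta_g = {"layer1": [1.0, 2.0], "layer2": [0.5]}
theta_n = {"layer1": [1.5, 1.0], "layer2": [0.0]}

theta_0 = extrapolate(theta_g, theta_n, gamma=0.0)  # identical to theta_g
theta_1 = extrapolate(theta_g, theta_n, gamma=1.0)  # one full step away from theta_n
```

Larger values of `gamma` push the parameters further from the adapted model, which is exactly the regime where the text warns that generation quality may degrade.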

Proposed Methodology

Overview of the Proposed Method

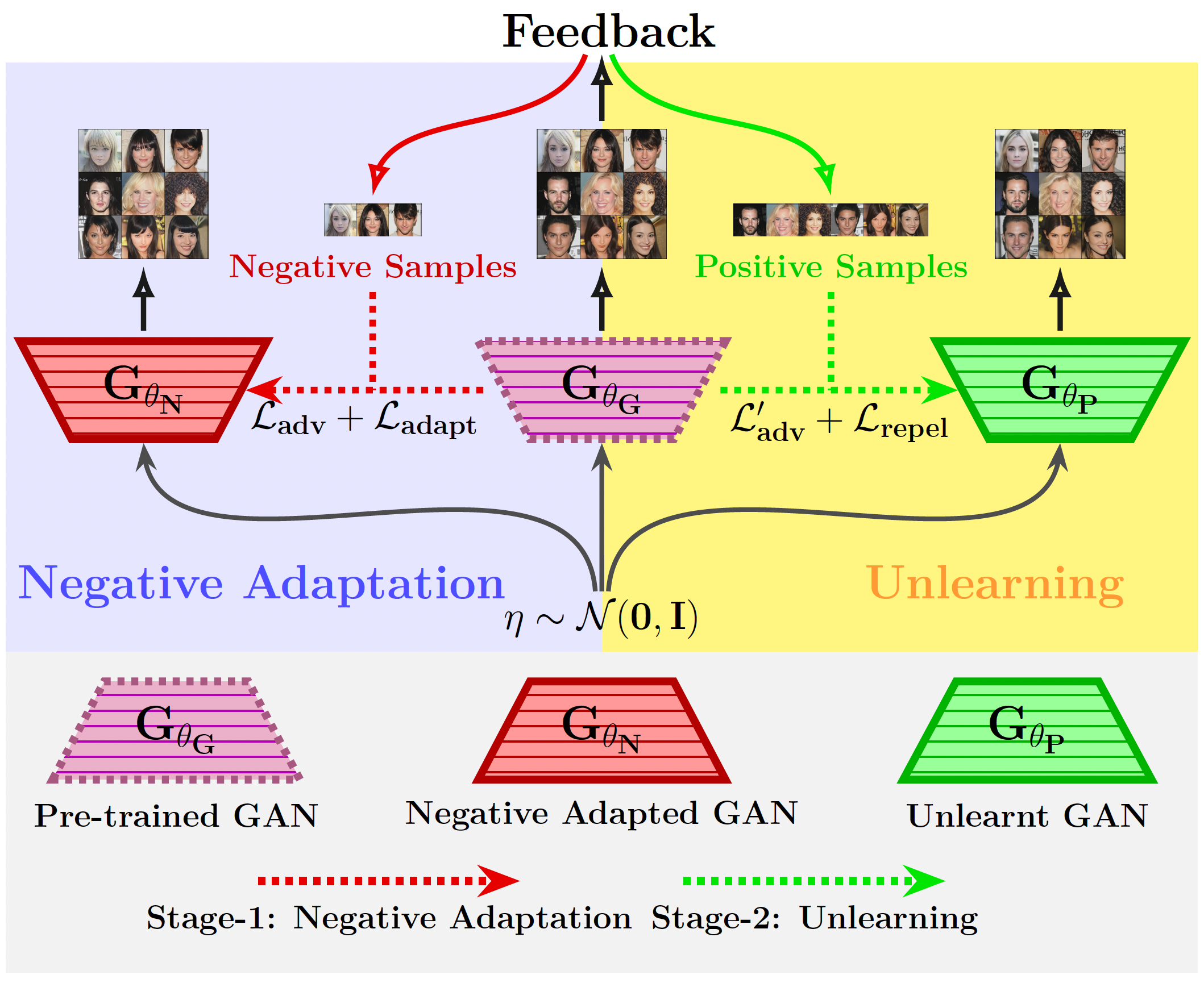

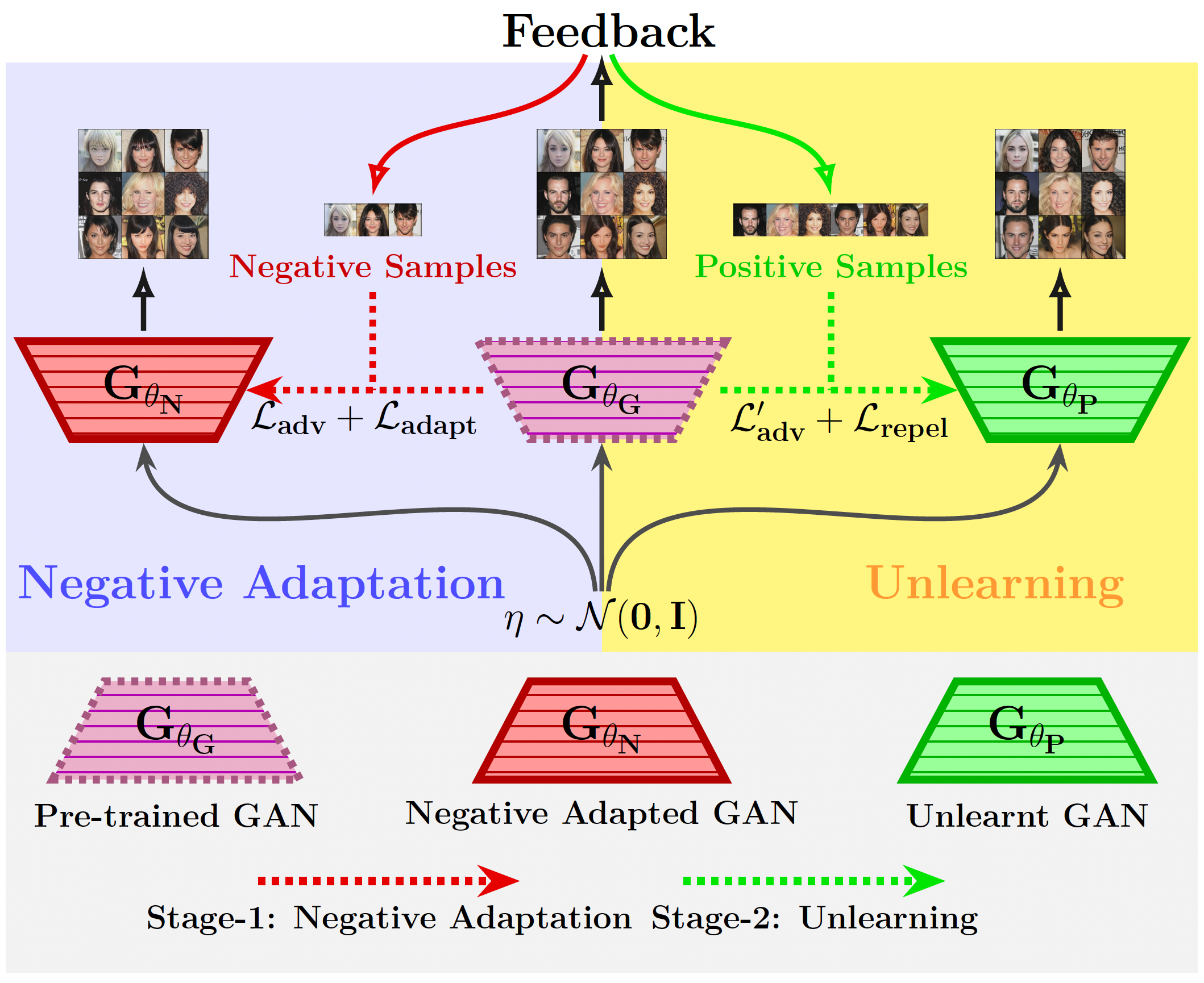

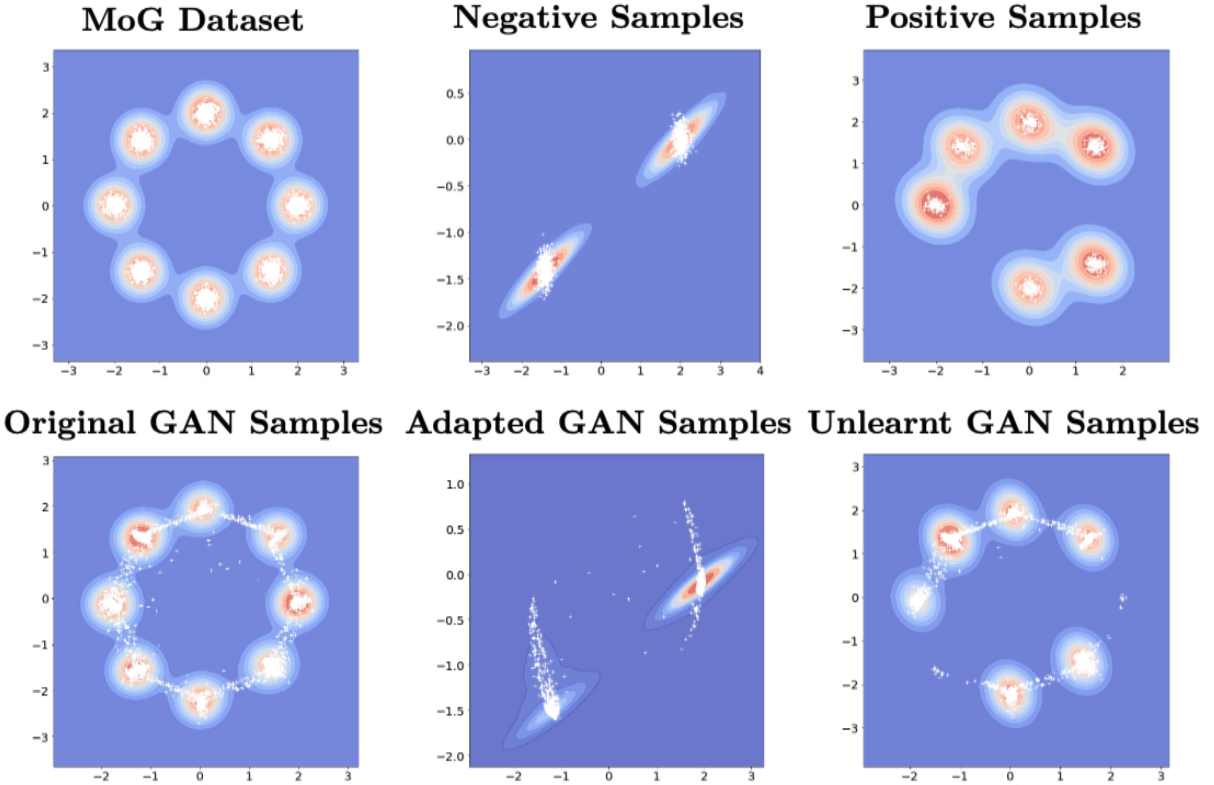

Our proposed method follows a two-stage process:

- Stage 1 - Negative Adaptation: We first adapt the pre-trained GAN (\(\theta_G\)) using a small set of 'negative' samples provided by the user, which contain the undesired features. This results in an adapted GAN (\(\theta_N\)) that primarily generates these negative samples. We use techniques like Elastic Weight Consolidation (EWC) to handle the few-shot nature of this adaptation.

- Stage 2 - Unlearning: Subsequently, we train the original pre-trained GAN using 'positive' samples (those without undesired features). Crucially, we introduce a repulsion regularizer (\(\mathcal{L}_{\text{repulsion}}\)) that encourages the learned model parameters (\(\theta_P\)) to move away from the negative parameters (\(\theta_N\)) obtained in Stage 1, while the standard adversarial loss ensures generation quality is maintained.
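As a rough sketch, the Stage 2 objective can be written as a regularized adversarial loss. The symbols \(\mathcal{L}_{\text{adv}}\) (the adversarial loss on positive samples) and \(\gamma\) (a weight on the regularizer) are notation assumed here for illustration, and the discriminator's inner maximization is omitted for brevity:

$$
\theta_P = \arg\min_{\theta} \; \mathcal{L}_{\text{adv}}(\theta) + \gamma \, \mathcal{L}_{\text{repulsion}}(\theta; \theta_N), \qquad \theta \text{ initialized at } \theta_G
$$

The first term keeps the generator on the positive data distribution, while the second term penalizes staying close to \(\theta_N\).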

Choice of Repulsion Loss

The repulsion loss (\(\mathcal{L}_{\text{repulsion}}\)) should encourage the learned parameters to move away from \(\theta_N\), the parameters obtained in the negative adaptation stage. In general, any function of \(\|\theta - \theta_N\|_2^2\) that attains its global maximum at \(\theta_N\) can serve as the repulsion loss, since minimizing such a function pushes \(\theta\) away from \(\theta_N\). In this work, we explore three different choices for the repulsion loss:

$$
\mathcal{L}_{\text{repulsion}} =
\begin{cases}
\mathcal{L}_{\text{repulsion}}^{\text{IL2}} = \dfrac{1}{\|\theta - \theta_N\|_2^2} & \text{(Inverse $\ell_2$ loss)} \\
\mathcal{L}_{\text{repulsion}}^{\text{NL2}} = -\|\theta - \theta_N\|_2^2 & \text{(Negative $\ell_2$ loss)} \\
\mathcal{L}_{\text{repulsion}}^{\text{EL2}} = \exp\left(-\alpha \|\theta - \theta_N\|_2^2\right) & \text{(Exponential negative $\ell_2$ loss)}
\end{cases}
$$
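To make the three choices concrete, here is a small sketch that evaluates each loss on flattened parameter vectors. It is an illustration under stated assumptions rather than the released code: the function names, the `eps` term that guards against division by zero in the inverse loss, and the example value `alpha=0.1` are all introduced here.

```python
import math


def sq_dist(theta, theta_n):
    """Squared l2 distance between two flattened parameter vectors."""
    return sum((t - n) ** 2 for t, n in zip(theta, theta_n))


def repulsion_il2(theta, theta_n, eps=1e-12):
    """Inverse l2 loss; eps is an added stabilizer, not part of the formula."""
    return 1.0 / (sq_dist(theta, theta_n) + eps)


def repulsion_nl2(theta, theta_n):
    """Negative l2 loss."""
    return -sq_dist(theta, theta_n)


def repulsion_el2(theta, theta_n, alpha=0.1):
    """Exponential negative l2 loss; alpha=0.1 is an arbitrary example value."""
    return math.exp(-alpha * sq_dist(theta, theta_n))


theta_n = [0.0, 0.0]   # toy adapted parameters
near = [1.0, 2.0]      # squared distance 5 from theta_n
far = [2.0, 4.0]       # squared distance 20 from theta_n

losses_near = (repulsion_il2(near, theta_n), repulsion_nl2(near, theta_n), repulsion_el2(near, theta_n))
losses_far = (repulsion_il2(far, theta_n), repulsion_nl2(far, theta_n), repulsion_el2(far, theta_n))
```

All three losses are smaller at `far` than at `near`, so minimizing any of them drives the parameters away from \(\theta_N\). They differ in how the gradient behaves with distance: the negative \(\ell_2\) loss is unbounded below, whereas the inverse and exponential variants flatten out as \(\theta\) moves far from \(\theta_N\).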

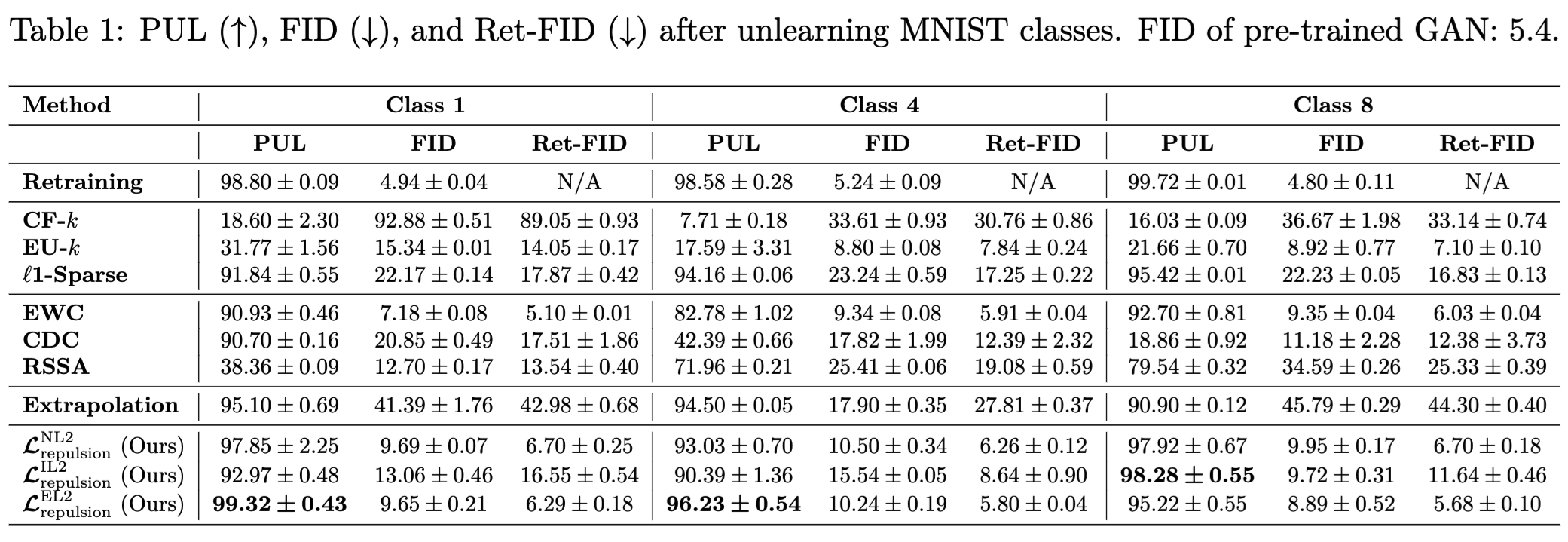

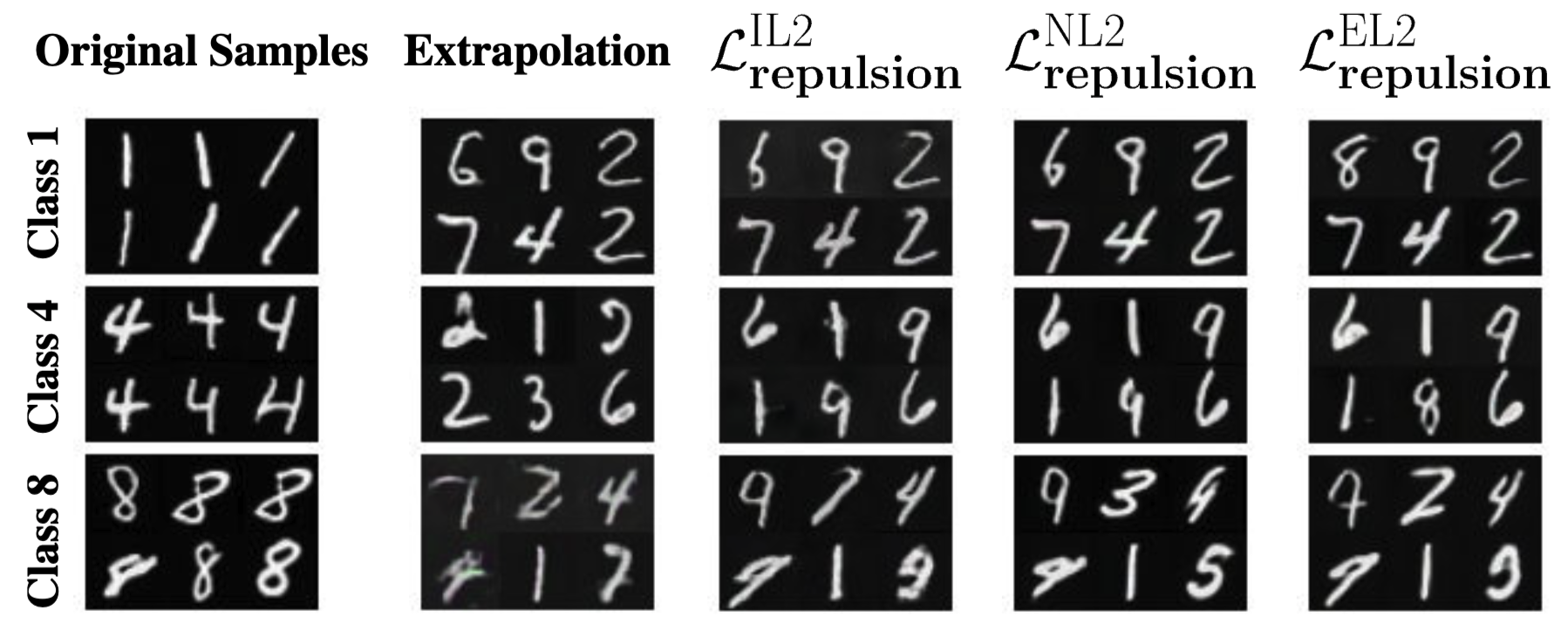

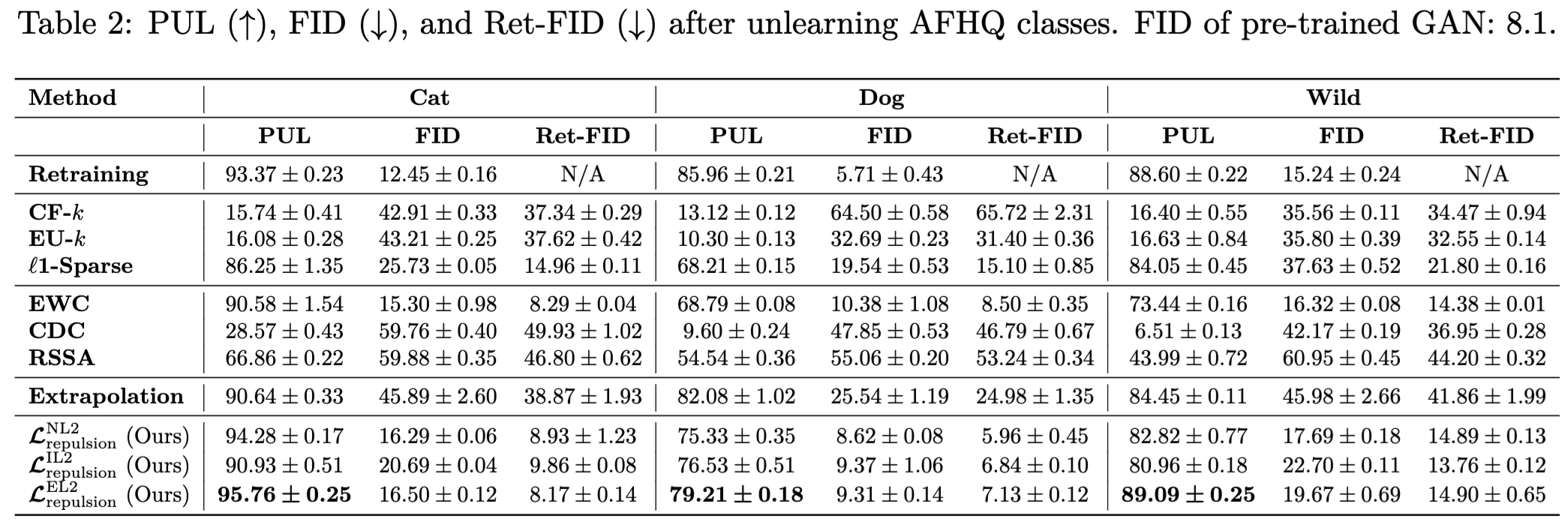

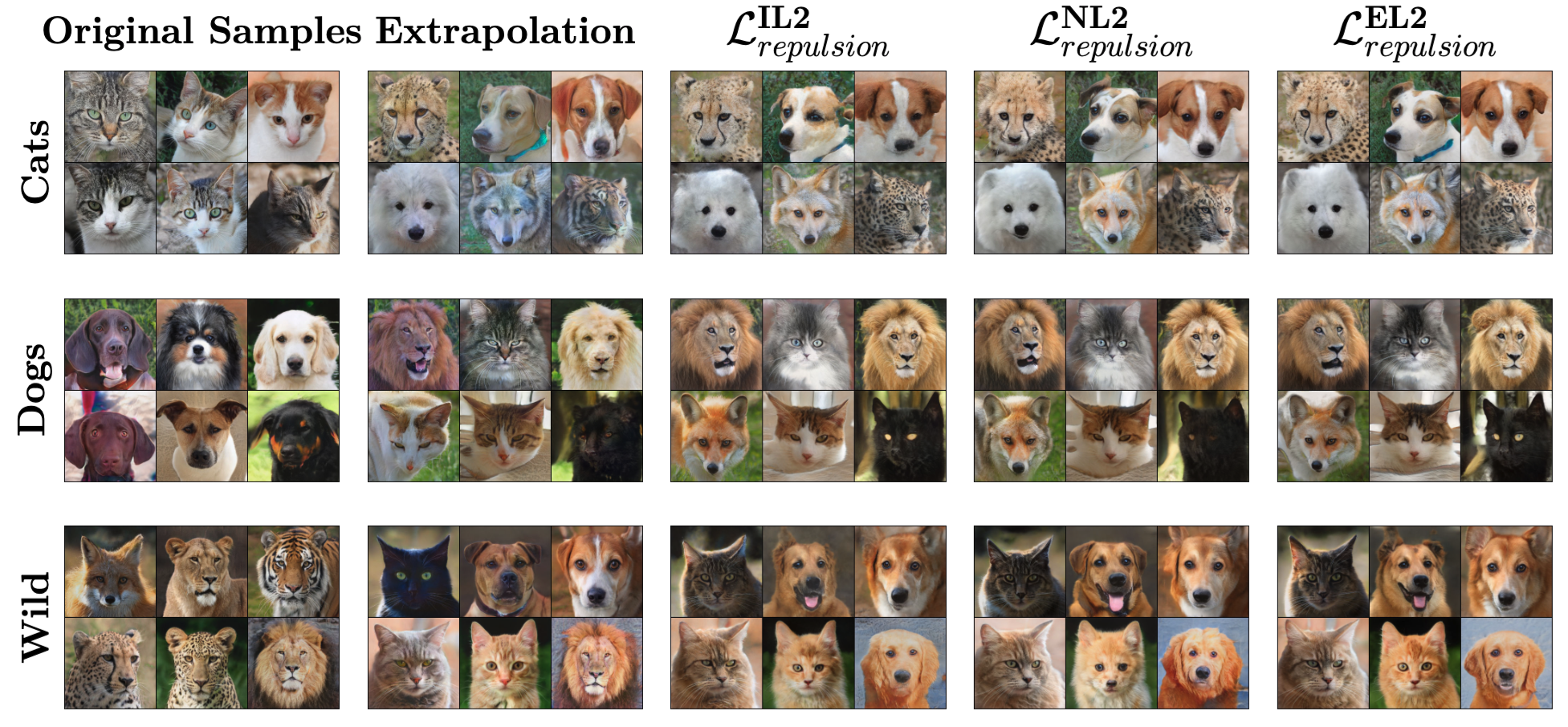

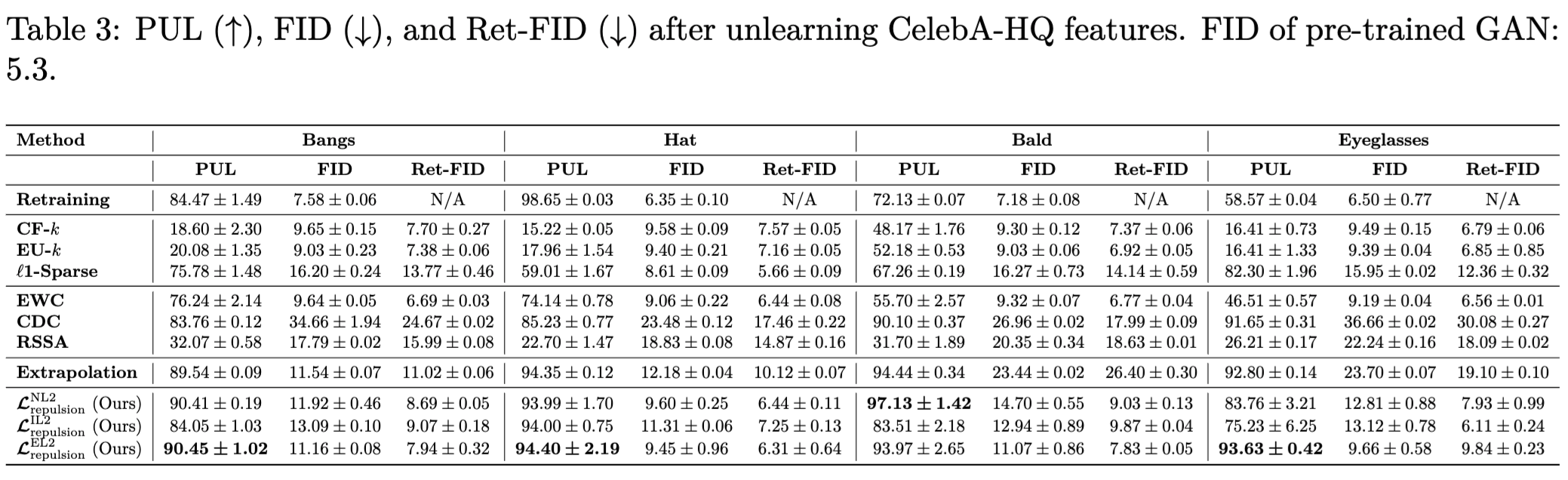

Results

If you find our work useful, please consider citing our paper:

@article{tiwary2025adapt,
  title={Adapt then Unlearn: Exploring Parameter Space Semantics for Unlearning in Generative Adversarial Networks},
  author={Piyush Tiwary and Atri Guha and Subhodip Panda and Prathosh A.P.},
  journal={Transactions on Machine Learning Research},
  year={2025},
  url={https://openreview.net/forum?id=jAHEBivObO},
  note={Published 02/2025}
}

References

2023

- Editing models with task arithmetic. In The Eleventh International Conference on Learning Representations, 2023